What Customers Want from Business Transformation Solutions

Whatever industry you work in, customer satisfaction comes first. After all, without customers there is no business! That is why customer satisfaction is a top priority for many companies, and why countless studies, articles, and reports revolve around what customers expect and how companies can meet those expectations.


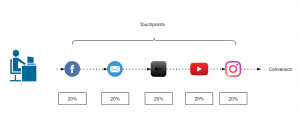

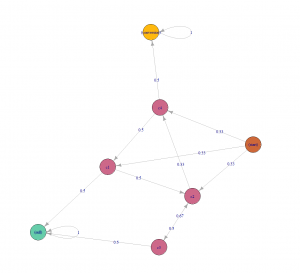

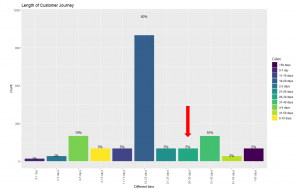

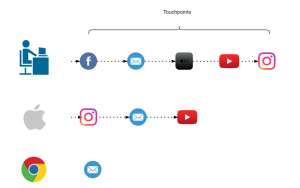

From a process management perspective, there is already a technique for understanding how customers interact with your company: customer journey mapping (CJM). Customer journey mapping lets you trace exactly how customers interact with your company and what they experience along the way. It helps answer questions such as:

- Do customers have positive or negative feelings when they come into contact with specific processes in your company?

- Are there points where customers get stuck, drop off, or want more information?

- How do customers actually respond to your customer service options?

Beyond answering these questions internally, however, there is a more important and very simple way to improve customer satisfaction and retention: just ask your customers!

What Business Transformation Customers Expect

Technology makes it easier than ever for companies to ask their customers directly about products and services. The risk, however, is that customers are contacted too often, and the company achieves exactly the opposite of what it intended. In addition, restrictions on collecting and using customer data can make actually reaching customers a challenge.

One way to overcome these hurdles is to use online technology review services. These websites offer a wealth of information about what customers value across a wide range of industries. Signavio, for example, uses IT Central Station to track customer reviews of business transformation software. Looking at these reviews as a whole, two themes come up again and again: collaboration and ease of use.

This is also reflected in user comments:

- “In my view, the Collaboration Hub definitely offers the most valuable features. More and more users are using it and getting familiar with it.”

- “In my experience, one of the best features of Signavio is the Collaboration Hub, which gives users from different departments constant access to their TO-BE process design.”

- “When we were looking for solutions, ease of use was one of the most important criteria. Ease of use had a big impact on adoption in our organization. If employees had struggled with the solution, they would not have used it. I would say ease of use is a pretty important factor when choosing a solution.”

- “One of the most important features of the solution is its ease of use. A really good investment. Employees want tools they can use easily and right away.”

- “The interface is very intuitive. I model a lot of processes, and this tool makes it really easy for me.”

One Final Tip

To meet your customers' needs and build lasting customer relationships, you have to understand your customers. And, as is so often the case, emotions play a big role here.

The same is true of business transformation, as the lead business analyst at a media company with more than 10,000 employees emphasized: “You have a feeling for what you want to do, and then you look at the available tools, and that makes your decision all the easier.”

Are you ready to choose the right business transformation solution? Then sign up today for a free 30-day trial of Signavio.