Sentiment Analysis using Python

One of the applications of text mining is sentiment analysis. Most of the data is getting generated in textual format and in the past few years, people are talking more about NLP. Improvement is a continuous process and many product based companies leverage these text mining techniques to examine the sentiments of the customers to find about what they can improve in the product. This information also helps them to understand the trend and demand of the end user which results in Customer satisfaction.

As text mining is a vast concept, the article is divided into two subchapters. The main focus of this article will be calculating two scores: sentiment polarity and subjectivity using python. The range of polarity is from -1 to 1(negative to positive) and will tell us if the text contains positive or negative feedback. Most companies prefer to stop their analysis here but in our second article, we will try to extend our analysis by creating some labels out of these scores. Finally, a multi-label multi-class classifier can be trained to predict future reviews.

Without any delay let’s deep dive into the code and mine some knowledge from textual data.

There are a few NLP libraries existing in Python such as Spacy, NLTK, gensim, TextBlob, etc. For this particular article, we will be using NLTK for pre-processing and TextBlob to calculate sentiment polarity and subjectivity.

The dataset is available here for download and we will be using pandas read_csv function to import the dataset. I would like to share an additional information here which I came to know about recently. Those who have already used python and pandas before they probably know that read_csv is by far one of the most used function. However, it can take a while to upload a big file. Some folks from RISELab at UC Berkeley created Modin or Pandas on Ray which is a library that speeds up this process by changing a single line of code.

After importing the dataset it is recommended to understand it first and study the structure of the dataset. At this point we are interested to know how many columns are there and what are these columns so I am going to check the shape of the data frame and go through each column name to see if we need them or not.

There are so many columns which are not useful for our sentiment analysis and it’s better to remove these columns. There are many ways to do that: either just select the columns which you want to keep or select the columns you want to remove and then use the drop function to remove it from the data frame. I prefer the second option as it allows me to look at each column one more time so I don’t miss any important variable for the analysis.

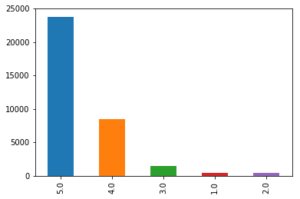

Now let’s dive deep into the data and try to mine some knowledge from the remaining columns. The first step we would want to follow here is just to look at the distribution of the variables and try to make some notes. First, let’s look at the distribution of the ratings.

Graphs are powerful and at this point, just by looking at the above bar graph we can conclude that most people are somehow satisfied with the products offered at Amazon. The reason I am saying ‘at’ Amazon is because it is just a platform where anyone can sell their products and the user are giving ratings to the product and not to Amazon. However, if the user is satisfied with the products it also means that Amazon has a lower return rate and lower fraud case (from seller side). The job of a Data Scientist relies not only on how good a model is but also on how useful it is for the business and that’s why these business insights are really important.

Data pre-processing for textual variables

Lowercasing

Before we move forward to calculate the sentiment scores for each review it is important to pre-process the textual data. Lowercasing helps in the process of normalization which is an important step to keep the words in a uniform manner (Welbers, et al., 2017, pp. 245-265).

Special characters

Special characters are non-alphabetic and non-numeric values such as {!,@#$%^ *()~;:/<>\|+_-[]?}. Dealing with numbers is straightforward but special characters can be sometimes tricky. During tokenization, special characters create their own tokens and again not helpful for any algorithm, likewise, numbers.

Stopwords

Stop-words being most commonly used in the English language; however, these words have no predictive power in reality. Words such as I, me, myself, he, she, they, our, mine, you, yours etc.

Stemming

Stemming algorithm is very useful in the field of text mining and helps to gain relevant information as it reduces all words with the same roots to a common form by removing suffixes such as -action, ing, -es and -ses. However, there can be problematic where there are spelling errors.

This step is extremely useful for pre-processing textual data but it also depends on your goal. Here our goal is to calculate sentiment scores and if you look closely to the above code words like ‘inexpensive’ and ‘thrilled’ became ‘inexpens’ and ‘thrill’ after applying this technique. This will help us in text classification to deal with the curse of dimensionality but to calculate the sentiment score this process is not useful.

Sentiment Score

It is now time to calculate sentiment scores of each review and check how these scores look like.

As it can be observed there are two scores: the first score is sentiment polarity which tells if the sentiment is positive or negative and the second score is subjectivity score to tell how subjective is the text. The whole code is available here.

In my next article, we will extend this analysis by creating labels based on these scores and finally we will train a classification model.

Leave a Reply

Want to join the discussion?Feel free to contribute!