Statistical Relational Learning

An Introduction to Statistical Relational Learning – Part 1

Statistical Relational Learning (SRL) is an emerging field and one that is taking centre stage in the Data Science age. Big Data has been one of the primary reasons for the continued prominence of this relational learning approach given, the voluminous amount of data available now to learn interesting and unknown patterns from data. Moreover, the tools have also improved their processing prowess especially, in terms of scalability.

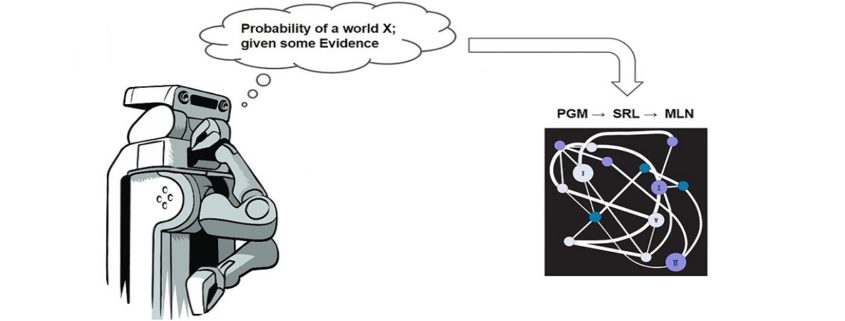

This introductory blog is a prelude on SRL and later on I would also touch base on more advanced topics, specifically Markov Logic Networks (MLN). To start off, let’s look at how SRL fits into one of the 5 different Machine Learning paradigms.

Five Machine Learning Paradigms

Lets look at the 5 Machine Learning Paradigms: Each of which is inspired by ideas from a different field!

- Connectionists as they are called and led by Geoffrey Hinton (University of Toronto & Google and one of the major names in the Deep Learning community) think that a learning algorithm should mimic the brain! After all it is the brain that does all the complex actions for us and, this idea stems from Neuroscience.

- Another group of Evolutionists whose leader is the late John Holland (from the University of Michigan) believed it is not the brain but evolution that was precedent and hence the master algorithm to build anything. And using this approach of having the fittest ones program the future they are currently building 3D prints of future robots.

- Another thought stems from Philosophy where Analogists like Douglas R. Hofstadter an American writer and author of popular and award winning book – Gödel, Escher, Bach: an Eternal Golden Braid believe that Analogy is the core of Cognition.

- Symbolists like Stephen Muggleton (Imperial College London) think Psychology is the base and by developing Rules in deductive reasoning they built Adam – a robot scientist at the University of Manchester!

- Lastly we have a school of thought which has its foundations rested on Statistics & Logic, which is the focal point of interest in this blog. This emerging field has started to gain prominence with the invention of Bayesian networks 2011 by Judea Pearl (University of California Los Angeles – UCLA) who was awarded with the Turing award (the highest award in Computer Science). Bayesians as they are called, are the most fanatical of the lot as they think everything can be represented by the Bayes theorem using hypothesis which can be updated based on new evidence.

SRL fits into the last paradigm of Statistics and Logic. As such it offers another alternative to the now booming Deep Learning approach inspired from Neuroscience.

Background

In many real world scenario and use cases, often the underlying data is assumed to be independent and identically distributed (i.i.d.). However, real world data is not and instead consists of many relationships. SRL as such attempts to represent, model, and learn in the relational domain!

There are 4 main Models in SRL

- Probabilistic Relational Models (PRM)

- Markov Logic Networks (MLN)

- Relational Dependency Networks (RDN)

- Bayesian Logic Programs (BLP)

It is difficult to cover all major models and hence the focus of this blog is only on the emerging field of Markov Logic Networks.

MLN is a powerful framework that combines statistics (i.e. it uses Markov Random Fields) and logical reasoning (first order logic).

Academia

Some of the prominent names in academic and the research community in MLN include:

- Professor Pedro Domingos from the University of Washington is credited with introducing MLN in his paper from 2006. His group created the tool called Alchemy which was one of the first, First Order Logic tools.

- Another famous name – Professor Luc De Raedt from the AI group at University of Leuven in Belgium, and their team created the tool ProbLog which also has a Python Wrapper.

- HAZY Project (Stanford University) led by Prof. Christopher Ré from the InfoLab is doing active research in this field and Tuffy, Felix, Elementary, Deep Dive are some of the tools developed by them. More on it later!

- Talking about academia close by i.e. in Germany, Prof. Michael Beetz and his entire team moved from TUM to TU Bremen. Their group invented the tool – ProbCog

- At present, Prof. Volker Tresp from Ludwig Maximilians University (LMU), Munich & Dr. Matthias Nickles at Technical University of Munich (TUM) have research interests in SRL.

Theory & Formulation

A look at some background and theoretical concepts to understand MLN better.

A. Basics – Probabilistic Graphical Models (PGM)

The definition of a PGM goes as such:

A PGM encodes a joint p(x,y) or conditional p(y|x) probability distribution such that given some observations we are provided with a full probability distribution over all feasible solutions.

A PGM helps to encode relationships between a set of random variables. And it achieves this by making use of a graph! These graphs can be either be Directed or Undirected Graphs.

B. Markov Blanket

A Markov Blanket is a Directed Acyclic graph. It is a Bayesian network and as you can see the central node A highlighted in red is dependent on its parents and parents of descendents (moralization) by the circle drawn around it. Thus these nodes are the only knowledge needed to predict node A.

C. Markov Random Fields (MRF)

A MRF is an Undirected graphical model. Every node in an MRF satisfies the Local Markov property of Conditional Independence, i.e. a node is conditionally independent of another node, given its neighbours. And now relating it to Markov Blanket as explained previously, a Markov blanket for a node is simply its adjacent nodes!

Intuition

We now that Probability handles uncertainty whereas Logic handles complexity. So why not make use of both of them to model relationships in data that is both uncertain and complex. Markov Logic Networks (MLN) precisely does that for us!

MLN is composed of a set of pairs of <w, F> where F is the formula (written in FO logic) and weights (real numbers identifying the strength of the constraint).

MLN basically provides a template to ground a Markov network. Grounding would be explained in detail in the next but one section on “Weight Learning”.

It can be defined as a Log linear model where probability of a world is given by the weighted sum of all true groundings of a formula i under an exponential function. It is then divided by Z which is termed as the partition function and used to normalize and get probability values between 0 and 1.

The MLN Template

Rules or Predicates

The relation to be learned is expressed in FO logic. Some of the different possible FO logical connectives and quantifiers are And (^), Or (V), Implication (→), and many more. Plus, Formulas may contain one or more predicates, connected to each other with logical connectives and quantified symbols.

Evidence

Evidence represent known facts i.e. the ground predicates. Each fact is expressed with predicates that contain only constants from their corresponding domains.

Weight Learning

Discover the importance of relations based on grounded evidence.

Inference

Query relations, given partial evidence to infer a probabilistic estimate of the world.

More on Weight Learning and Inference in the next part of this series!

Hope you enjoyed the read. I have deliberately kept the content basic and a mix of non technical and technical so as to highlight first the key players and some background concepts and generate the reader’s interest in this topic, the technicalities of which can easily be read in the paper. Any feedback as a comment below or through a message are more than welcome!

Continue reading with Statistical Relational Learning – Part II.

References

- Richardson, Matthew, and Pedro Domingos – Markov logic networks. In Machine learning, vol. 62, no. 1-2, pp. 107-136, 2006.

- Pedro’s TEDx video at University of Washington: The Quest for the Master Algorithm

- Hazy project webpage